ChatGPT 4o and Anthropic Claude Sonnet 3.5 each offer distinct advantages suited to different user needs. ChatGPT 4o is built for rapid responses and performs well on undergraduate-level knowledge tasks, while Claude Sonnet 3.5 delivers stronger performance in creative applications, advanced reasoning, and coding. Claude's recent benchmark results show its strengths in complex problem-solving and language processing, where it outpaces ChatGPT in areas such as graduate-level reasoning and writing. Claude is also priced lower for input tokens. Understanding these differences can help users choose the right model for their specific requirements and application scenarios.

Key Takeaways

- Claude Sonnet 3.5 excels in creative tasks, humor, and advanced coding, making it ideal for users seeking engaging content.

- ChatGPT 4o offers faster response times, which is beneficial for quick queries and undergraduate-level knowledge tasks.

- In performance benchmarks, Claude Sonnet 3.5 outperformed ChatGPT 4o in graduate-level reasoning and coding evaluations.

- Pricing for Claude Sonnet 3.5 is more competitive for input tokens, potentially offering better value for extensive projects.

- Both models cater to different user needs: Claude for creativity and depth, ChatGPT for speed and traditional knowledge.

Model Overview

This section outlines the distinguishing features of ChatGPT 4o and Anthropic Claude Sonnet 3.5 and the strengths each brings to AI language processing.

Anthropic's Claude Sonnet 3.5 offers a larger context window (200K tokens, versus 128K for GPT-4o) and a more recent knowledge cutoff (2024), which helps it handle complex queries in depth. Its enhanced vision capabilities make it strong at handwriting recognition, it performs well at storytelling, and features such as Artifacts and Projects extend how users can work with it.

In contrast, ChatGPT 4o prioritizes generation speed and is particularly effective in undergraduate-level knowledge and coding evaluations. Each model offers distinct advantages, catering to different user needs within the AI landscape.

Benchmark Report: GPT-4o vs. Claude 3.5 Sonnet

The report from Generative AI Research compares several of the most advanced AI models, including GPT-4o and Claude-3.5-Sonnet, on the OlympicArena benchmark, which evaluates models on problems across seven disciplines to gauge their overall reasoning capability.

Summary of Results

Overall Performance

- GPT-4o: Ranked 1st overall with 7 medals (4 Gold, 3 Silver), scoring 40.47.

- Claude-3.5-Sonnet: Ranked 2nd overall with 6 medals (3 Gold, 3 Silver), scoring 39.24.

Detailed Comparative Analysis

Performance Across Disciplines

| Discipline | GPT-4o (%) | Claude-3.5-Sonnet (%) |
|---|---|---|
| Math | 28.32 | 23.18 |
| Physics | 30.01 | 31.16 |
| Chemistry | 46.68 | 47.27 |
| Biology | 53.11 | 56.05 |
| Geography | 56.77 | 55.19 |
| Astronomy | 44.50 | 43.51 |
| Computer Science | 8.43 | 5.19 |

Observations:

- GPT-4o leads in Mathematics and Computer Science, suggesting stronger deductive and algorithmic reasoning, and narrowly in Geography and Astronomy. Both models score below 10% in Computer Science, however.

- Claude-3.5-Sonnet outperforms GPT-4o in Physics, Chemistry, and Biology, indicating better integration of knowledge with reasoning in these subjects.

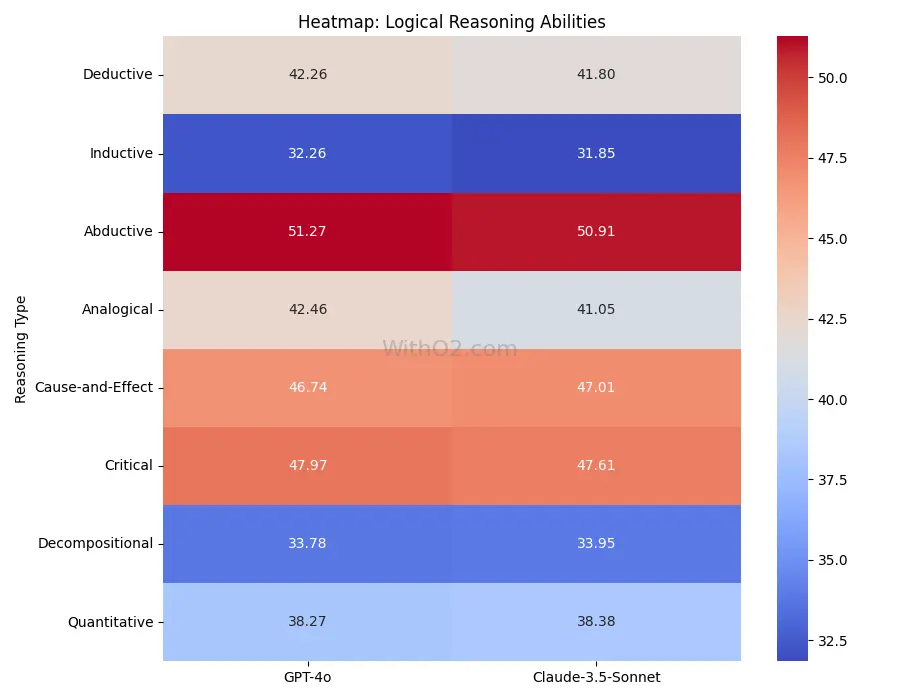

Logical Reasoning Abilities

| Reasoning Type | GPT-4o (%) | Claude-3.5-Sonnet (%) |
|---|---|---|
| Deductive | 42.26 | 41.80 |
| Inductive | 32.26 | 31.85 |
| Abductive | 51.27 | 50.91 |
| Analogical | 42.46 | 41.05 |
| Cause-and-Effect | 46.74 | 47.01 |
| Critical | 47.97 | 47.61 |
| Decompositional | 33.78 | 33.95 |
| Quantitative | 38.27 | 38.38 |

Observations:

- Both models show closely matched logical reasoning abilities, with every gap under 1.5 percentage points. GPT-4o leads narrowly in five of the eight types (deductive, inductive, abductive, analogical, and critical), while Claude-3.5-Sonnet is marginally ahead in cause-and-effect, decompositional, and quantitative reasoning.

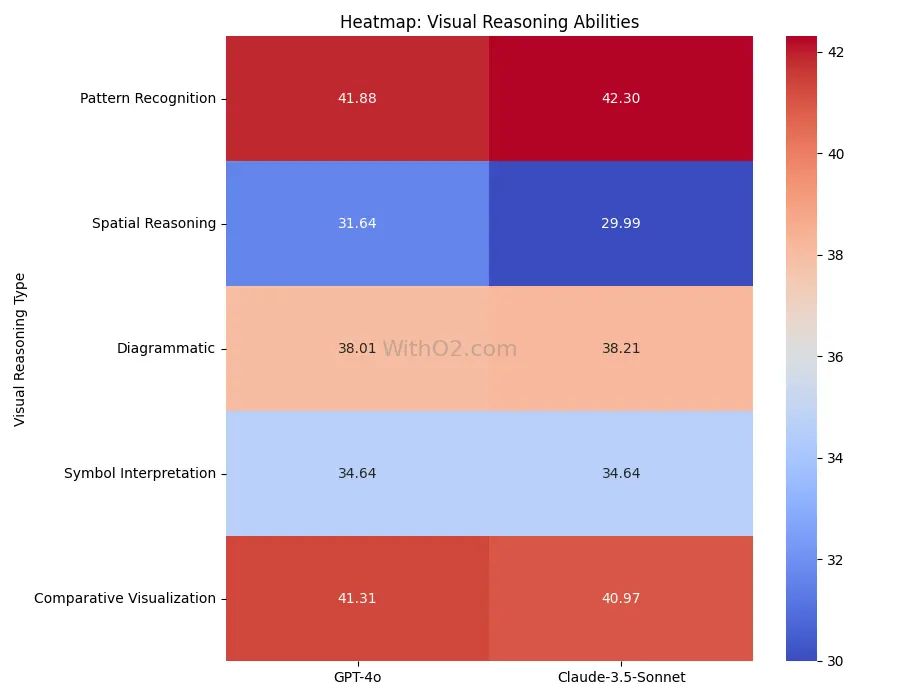

Visual Reasoning Abilities

| Reasoning Type | GPT-4o (%) | Claude-3.5-Sonnet (%) |
|---|---|---|
| Pattern Recognition | 41.88 | 42.30 |
| Spatial Reasoning | 31.64 | 29.99 |
| Diagrammatic | 38.01 | 38.21 |
| Symbol Interpretation | 34.64 | 34.64 |
| Comparative Visualization | 41.31 | 40.97 |

Observations:

- Claude-3.5-Sonnet has a slight advantage in pattern recognition and diagrammatic reasoning, while GPT-4o leads in spatial reasoning and comparative visualization; the two models tie on symbol interpretation.
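The observations above can be checked directly against the three tables. The short Python sketch below counts which model leads each row and reports the widest gap in percentage points. The scores are transcribed from the tables, and `TABLES` and `tally` are hypothetical names used for illustration. It compares raw scores only and does not reproduce OlympicArena's own overall scoring or medal method.

```python
# Scores (%) transcribed from the OlympicArena tables: (GPT-4o, Claude-3.5-Sonnet)
TABLES = {
    "Disciplines": {
        "Math": (28.32, 23.18),
        "Physics": (30.01, 31.16),
        "Chemistry": (46.68, 47.27),
        "Biology": (53.11, 56.05),
        "Geography": (56.77, 55.19),
        "Astronomy": (44.50, 43.51),
        "Computer Science": (8.43, 5.19),
    },
    "Logical reasoning": {
        "Deductive": (42.26, 41.80),
        "Inductive": (32.26, 31.85),
        "Abductive": (51.27, 50.91),
        "Analogical": (42.46, 41.05),
        "Cause-and-Effect": (46.74, 47.01),
        "Critical": (47.97, 47.61),
        "Decompositional": (33.78, 33.95),
        "Quantitative": (38.27, 38.38),
    },
    "Visual reasoning": {
        "Pattern Recognition": (41.88, 42.30),
        "Spatial Reasoning": (31.64, 29.99),
        "Diagrammatic": (38.01, 38.21),
        "Symbol Interpretation": (34.64, 34.64),
        "Comparative Visualization": (41.31, 40.97),
    },
}


def tally(rows):
    """Group rows by leading model and find the widest gap (in points)."""
    leads = {"GPT-4o": [], "Claude-3.5-Sonnet": [], "tie": []}
    widest = ("", 0.0)
    for name, (gpt, claude) in rows.items():
        # Round to two decimals to match the tables; positive means GPT-4o is ahead.
        gap = round(gpt - claude, 2)
        if gap > 0:
            leads["GPT-4o"].append(name)
        elif gap < 0:
            leads["Claude-3.5-Sonnet"].append(name)
        else:
            leads["tie"].append(name)
        if abs(gap) > abs(widest[1]):
            widest = (name, gap)
    return leads, widest


if __name__ == "__main__":
    for table, rows in TABLES.items():
        leads, (name, gap) = tally(rows)
        print(table)
        for model, names in leads.items():
            print(f"  {model}: {len(names)} ({', '.join(names) or '-'})")
        print(f"  widest gap: {name} ({gap:+.2f} points)")
```

Running it shows GPT-4o ahead in four of the seven disciplines, with the widest gap in Math (5.14 points), while the largest gaps in the logical and visual reasoning tables are only 1.41 (Analogical) and 1.65 (Spatial Reasoning) points.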

Insights

- Strengths:
  - GPT-4o performs best on tasks requiring mathematical reasoning and coding, possibly reflecting training that emphasizes deductive and algorithmic thinking.
  - Claude-3.5-Sonnet performs better in subjects that combine extensive knowledge with reasoning, particularly the natural sciences.
- Specialization:
  - GPT-4o's training appears to focus on traditional deductive and algorithmic tasks.
  - Claude-3.5-Sonnet seems optimized for tasks that blend knowledge integration with reasoning.

The comparative analysis shows that GPT-4o and Claude-3.5-Sonnet are both powerful AI models with distinct strengths. GPT-4o leads in mathematical and computational reasoning, while Claude-3.5-Sonnet performs better in the natural sciences and integrated reasoning tasks. This specialization may reflect different training priorities for each model.

Strengths and Weaknesses

Strengths and weaknesses of ChatGPT 4o and Anthropic Claude Sonnet 3.5 reveal distinct capabilities that cater to different user needs and application scenarios.

Best Model for Creative Tasks: Claude 3.5 Sonnet excels in humor, visuals, and debate, making it suitable for creative applications.

Chain of Thought Reasoning: Claude 3.5 Sonnet outperforms ChatGPT 4o in graduate-level reasoning and coding evaluations, appealing to users who need structured, multi-step problem solving.

Mathematical Proficiency: Claude 3.5 Sonnet achieves impressive scores in multilingual math and reasoning over text, establishing its strength in complex reasoning tasks.

While ChatGPT 4o leads in traditional knowledge domains, Claude 3.5 Sonnet shines in creative expression, showcasing the diversity within the latest AI technologies.

Pricing Comparison

The pricing structures of ChatGPT 4o and Anthropic Claude Sonnet 3.5 each carry distinct cost advantages, which can substantially influence the choice of model depending on project requirements.

Web Interface Pricing

Anthropic Claude

| Plan | Features | Pricing |
|---|---|---|
| Free | Talk to Claude on web, iOS, and Android; ask about images and docs; access to Claude 3.5 Sonnet | $0 (free for everyone) |
| Pro | Everything in Free; use Claude 3 Opus and Haiku; higher usage limits than Free; create Projects to work with Claude around a set of docs, code, or files; priority bandwidth and availability; early access to new features | $20 per person/month |
| Team | Everything in Pro; higher usage limits than Pro; share and discover chats from teammates; central billing and administration | $25 per person/month billed annually, or $30 per person/month billed monthly; minimum 5 members |

ChatGPT Pricing

| Plan | Description | Price | Features | Notes |
|---|---|---|---|---|
| Free | For individuals just getting started with ChatGPT | $0/month | Assistance with writing, problem-solving, and more; access to GPT-4o mini; limited access to GPT-4o, data analysis, file uploads, vision, web browsing, and custom GPTs | Limited access to certain features and models |
| Plus | For individuals looking to amplify their productivity | $20/month | Early access to new features; access to GPT-4, GPT-4o, and GPT-4o mini; up to 5x more messages for GPT-4o; DALL·E image generation; custom GPT creation and use | Higher limits on features and models than Free |
| Team | For fast-moving teams and organizations | $25/user/month billed annually or $30/user/month billed monthly | Everything in Plus; unlimited access to GPT-4o mini; higher message limits on GPT-4, GPT-4o, and tools such as DALL·E, web browsing, and data analysis; workspace management and team data privacy | Higher message limits than Plus; admin console; data excluded from training |

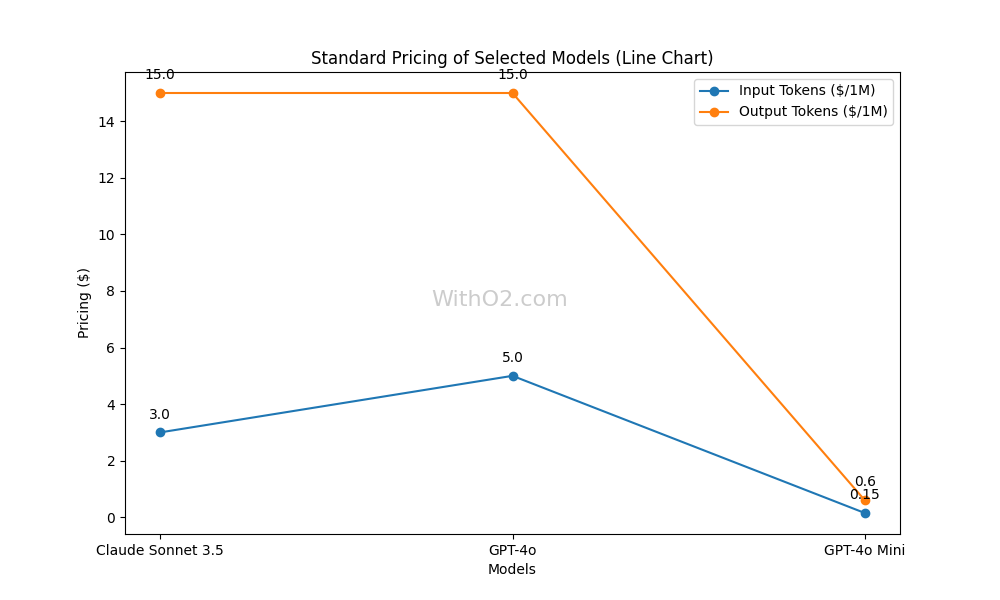
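Both Team plans list the same per-seat rates ($25 per month billed annually, $30 per month billed monthly), so one year's cost is seats * monthly rate * 12. The minimal Python sketch below spells out that arithmetic. `TEAM_RATES` and `yearly_team_cost` are hypothetical names, and the rates are copied from the tables above, which may not reflect current pricing.

```python
# Per-seat monthly rates (USD) for the Team plans, as listed in the pricing tables.
TEAM_RATES = {"annual": 25.0, "monthly": 30.0}


def yearly_team_cost(seats: int, billing: str, minimum_seats: int = 1) -> float:
    """Return one year's cost for a Team plan with the given number of seats."""
    if seats < minimum_seats:
        raise ValueError(f"plan requires at least {minimum_seats} seats")
    return seats * TEAM_RATES[billing] * 12


if __name__ == "__main__":
    # Claude's Team plan lists a 5-member minimum; no minimum is given here for ChatGPT Team.
    for billing in ("annual", "monthly"):
        print(billing, yearly_team_cost(5, billing, minimum_seats=5))
```

For the five-member minimum listed for Claude's Team plan, annual billing comes to $1,500 per year versus $1,800 when billed monthly.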

API Pricing Comparison

| Model | Standard input ($/1M tokens) | Standard output ($/1M tokens) | Batch API input ($/1M tokens) | Batch API output ($/1M tokens) |
|---|---|---|---|---|
| Claude Sonnet 3.5 | $3.00 | $15.00 | Not applicable | Not applicable |
| GPT-4o | $5.00 | $15.00 | $2.50 | $7.50 |
| GPT-4o-2024-05-13 | $5.00 | $15.00 | $2.50 | $7.50 |
| GPT-4o Mini | $0.150 | $0.600 | $0.075 | $0.300 |
| GPT-4o Mini-2024-07-18 | $0.150 | $0.600 | $0.075 | $0.300 |
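To see how these per-token rates translate into project costs, a workload's bill can be estimated as (input tokens * input rate + output tokens * output rate) / 1,000,000. The minimal Python sketch below works under stated assumptions: prices are copied from the table above (the dated snapshot rows are omitted because they match their base models), `PRICES` and `job_cost` are hypothetical names, and the 10M-input/1M-output workload is purely illustrative.

```python
# Prices in USD per 1M tokens, as (input, output), copied from the API pricing table.
# None marks "Not applicable" (no Batch API price listed for that model).
PRICES = {
    "Claude Sonnet 3.5": {"standard": (3.00, 15.00), "batch": None},
    "GPT-4o": {"standard": (5.00, 15.00), "batch": (2.50, 7.50)},
    "GPT-4o Mini": {"standard": (0.150, 0.600), "batch": (0.075, 0.300)},
}


def job_cost(model, input_tokens, output_tokens, tier="standard"):
    """Estimate a workload's cost in USD; return None if the tier has no listed price."""
    rates = PRICES[model][tier]
    if rates is None:
        return None
    input_rate, output_rate = rates
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Hypothetical input-heavy workload: 10M input tokens, 1M output tokens.
    for model in PRICES:
        for tier in ("standard", "batch"):
            cost = job_cost(model, 10_000_000, 1_000_000, tier)
            label = "n/a" if cost is None else f"${cost:,.2f}"
            print(f"{model:18} {tier:8} {label}")
```

For this input-heavy workload, Claude Sonnet 3.5 costs $45.00 at standard rates versus $65.00 for GPT-4o, since the two share the same output price. Where a use case can tolerate asynchronous processing, GPT-4o's Batch API halves its cost to $32.50.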

User Experience

Evaluating user experience reveals distinct differences between ChatGPT 4o and Anthropic Claude Sonnet 3.5, particularly in creative tasks and functionality.

Users working on creative projects may prefer Claude 3.5 Sonnet for the quality of its output.

Key distinctions include:

Creative Tasks: Claude Sonnet 3.5 excels at generating engaging content, such as humor-laden stories or fully functional games written in code.

Functionality: ChatGPT 4o responds faster, though it often needs multiple prompts to complete complex tasks such as creating vector graphics.

Detail Orientation: Claude 3.5 Sonnet provides richer explanations, enhancing user experience in tasks involving handwriting recognition and AI video generation.

Ultimately, these differences highlight the unique strengths of each top AI model in user experience.

Final Thoughts

The comparative analysis of user experience underscores the distinct capabilities of ChatGPT 4o and Anthropic Claude Sonnet 3.5, leading to important considerations for their future development and application.

While Claude 3.5 Sonnet excels in creative tasks, graduate-level reasoning, and coding, GPT-4o demonstrates strengths in undergraduate-level knowledge and generation speed.

However, Claude 3.5 Sonnet's lead on several benchmarks suggests that OpenAI risks falling behind if it does not realize GPT-4o's full potential. Expanding capabilities such as voice and vision could make it more competitive.

Ultimately, the comparison suggests that while both models have merits, Claude 3.5 Sonnet offers more robust performance overall; further evaluation will help users make the most of each system's strengths.

Frequently Asked Questions

Is Claude 3.5 Sonnet Better Than GPT-4?

Determining whether one AI model is superior to another depends on specific use cases. While one may excel in creative tasks, the other may outperform in reasoning and speed, highlighting the importance of context in evaluation.

Is Claude AI Better Than GPT-4?

Whether Claude AI is superior to GPT-4 depends on the use case, including creative tasks, reasoning ability, and pricing. Each model is stronger in different areas, so the best choice depends on the intended application.

Conclusion

To summarize, ChatGPT 4o and Anthropic Claude Sonnet 3.5 each offer distinct advantages tailored to specific user needs.

For instance, benchmark tests indicate that Claude Sonnet 3.5 outperforms ChatGPT 4o on graduate-level reasoning tasks.

This underscores the nuanced capabilities of each model: Claude Sonnet 3.5 is strong in complex analysis, while ChatGPT 4o remains better suited to real-time interactions.

Understanding these differences can guide users in selecting the most suitable AI model for their applications.